Coded Fairness Project | Master Thesis

Enabling a bias-sensitive development process of machine learning systems

––

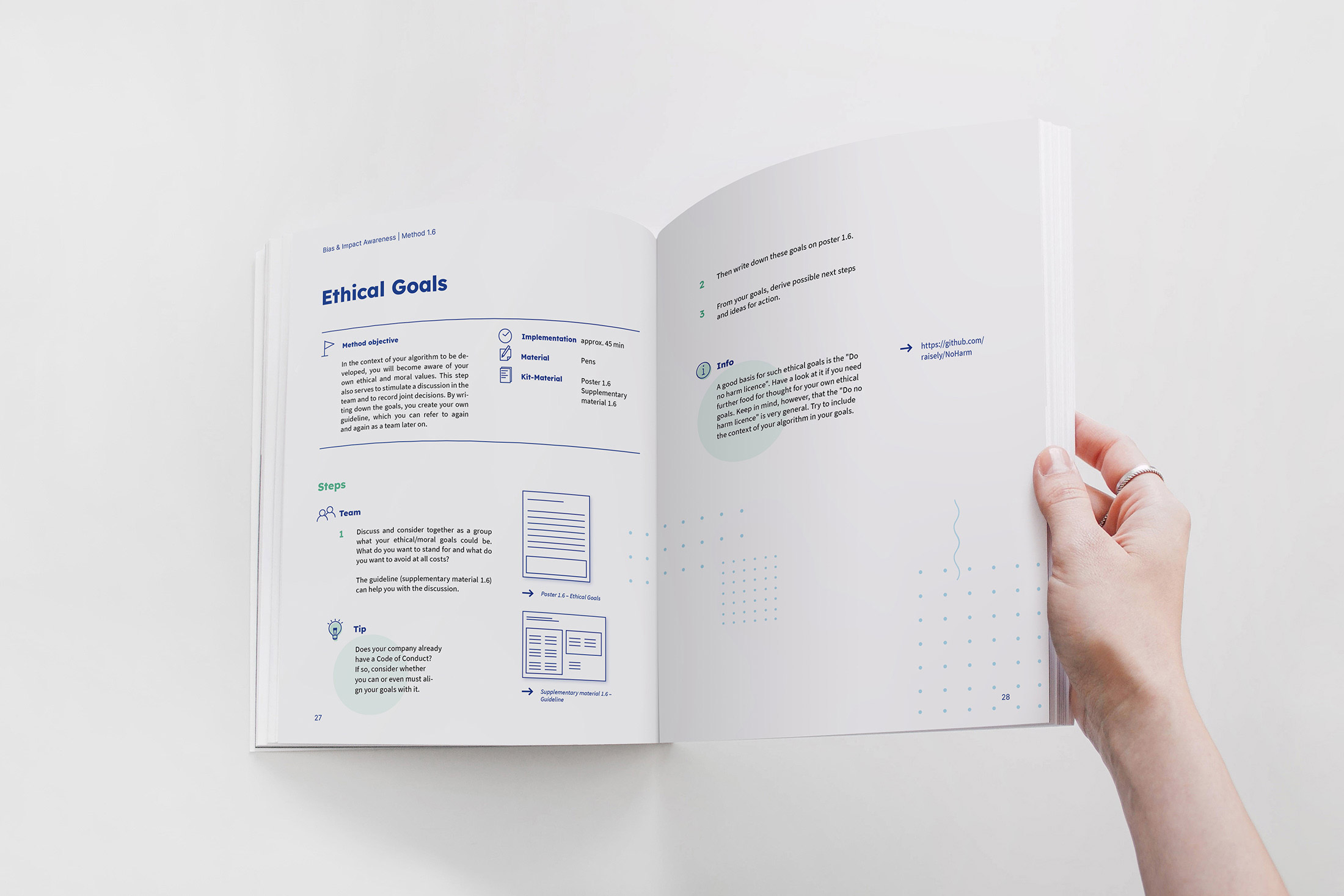

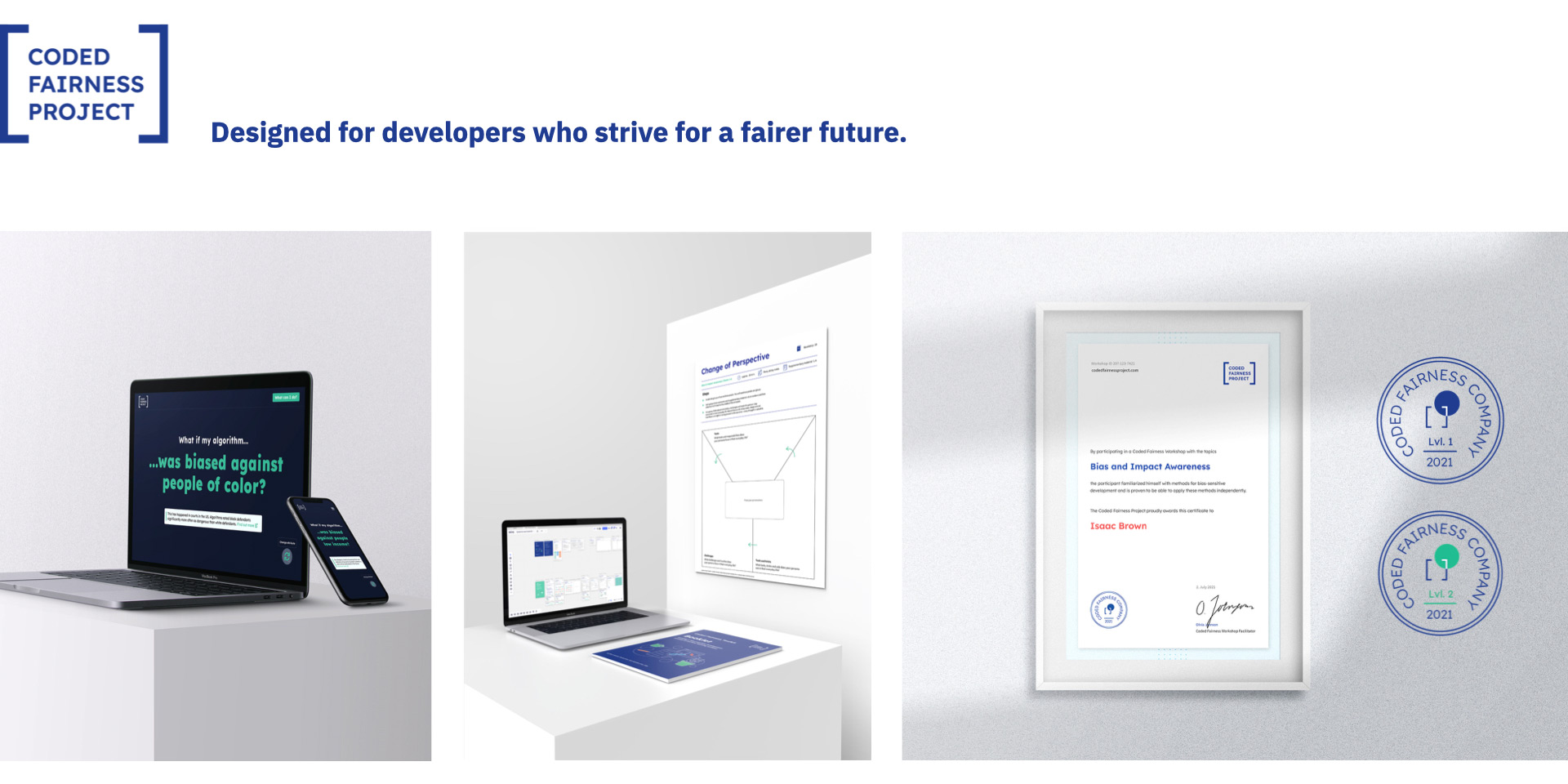

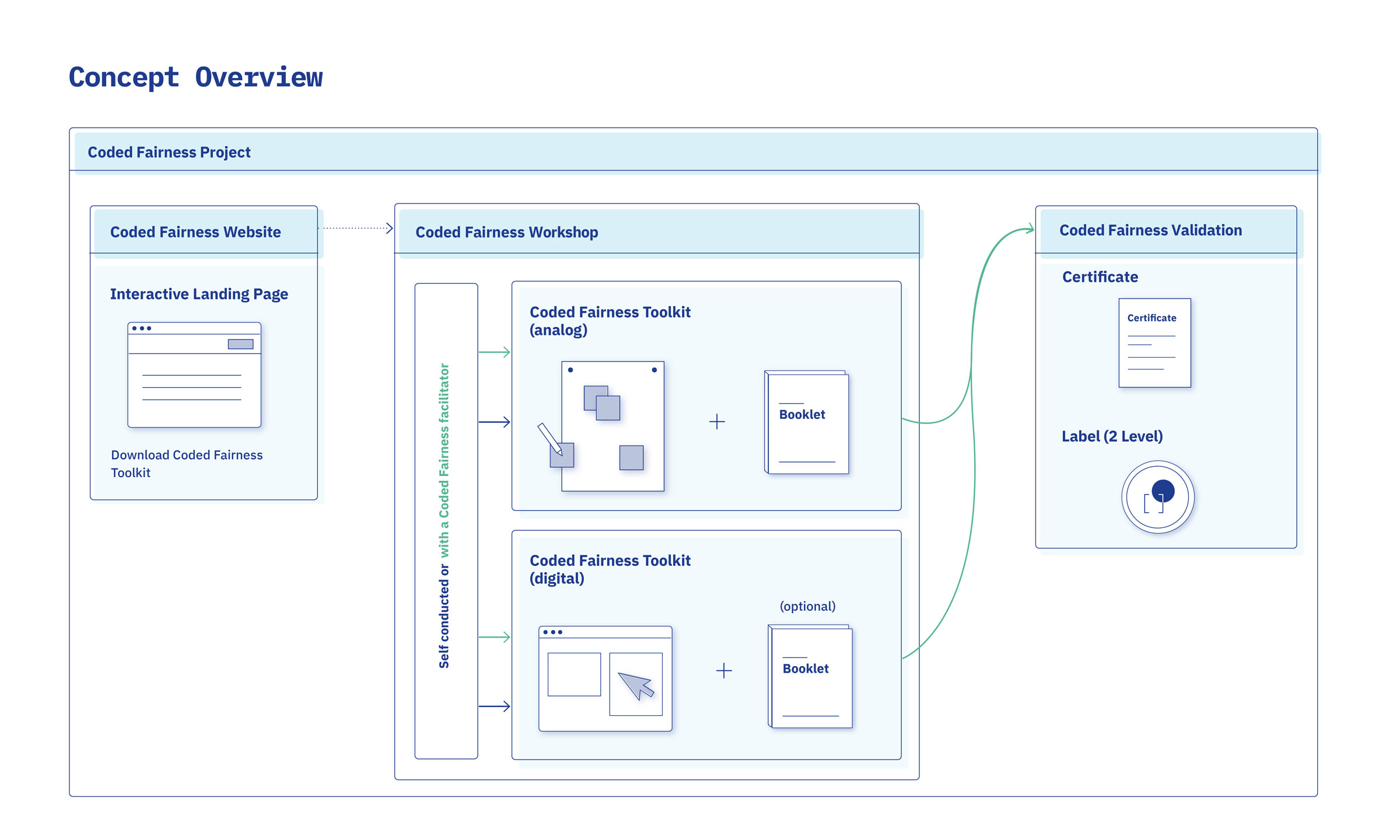

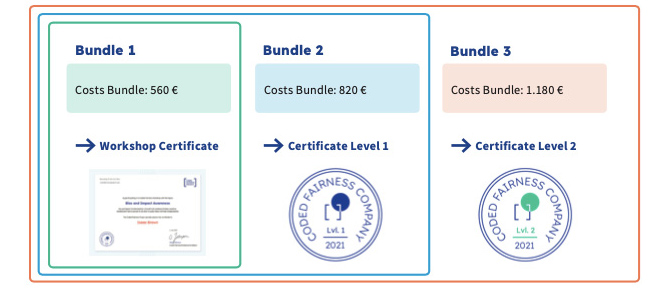

The Coded Fairness Project combines methods that promote bias-sensitive development of machine learning systems with discursive approaches to supporting such efforts within a company. The people involved in a system’s development can apply the methods in the form of a workshop. The enclosed booklet provides background information, hints and instructions to support the implementation.

Machine learning is being used in more and more industries to solve complex problems. Although it often adds real value, its use can also have undesirable side effects. Discrimination by algorithms is already an everyday problem, and as a result, old prejudices deeply rooted in our society are transferred into a new medium and scaled up. The cause is the biases found within the algorithms.

Our solution includes a toolkit consisting of printable templates for method posters and an accompanying booklet for conducting a method workshop.

-> What are biases and how can the Coded Fairness Project help?

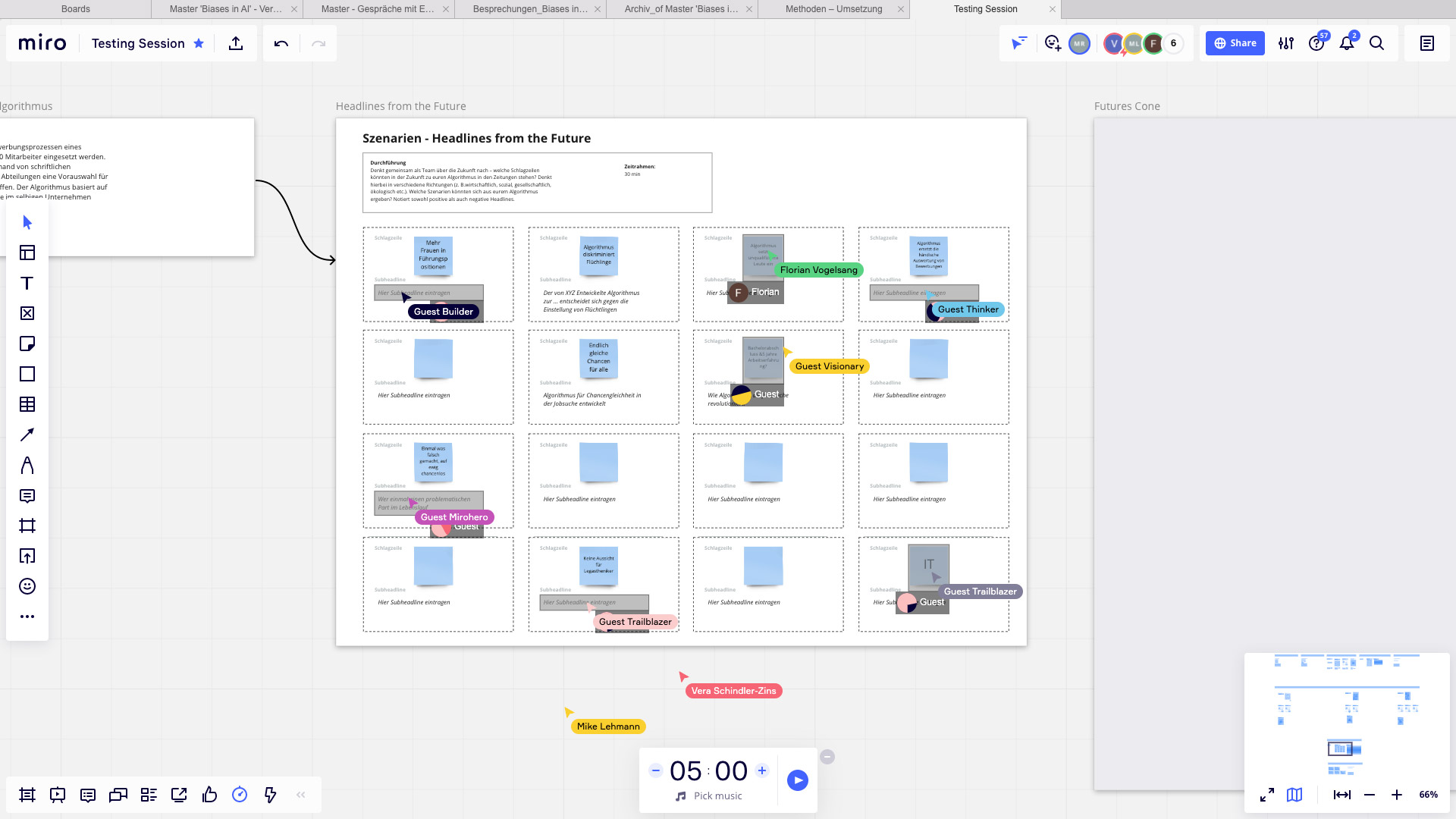

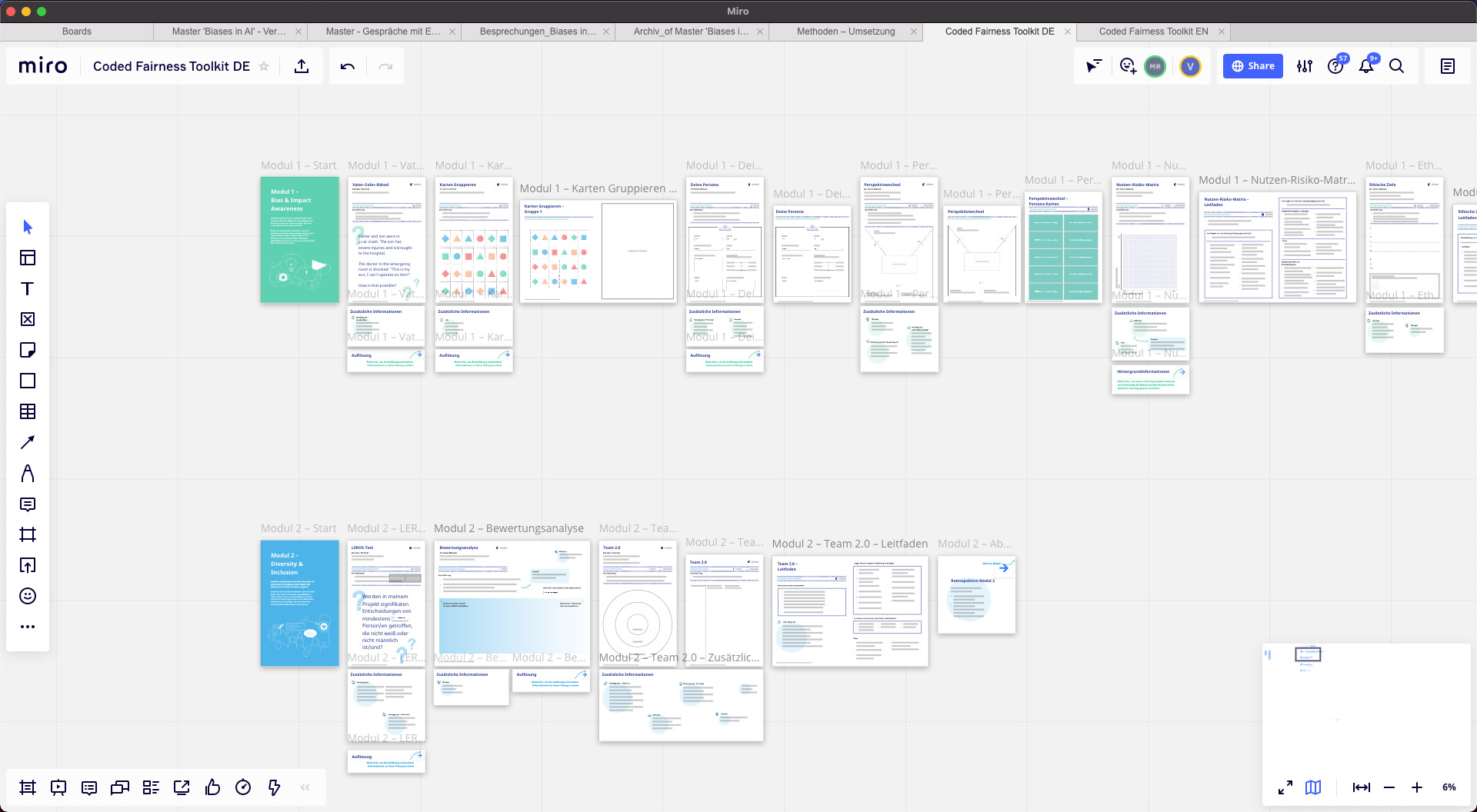

In addition to an analog implementation of the methods, we offer a digital one. By providing a Miro board template, we make it easy to conduct the methods collaboratively, digitally and remotely. (Miro is a collaborative online whiteboard application.) Both versions are available free of charge on our website and in the Miroverse, the official Miro template library.

Our Approach

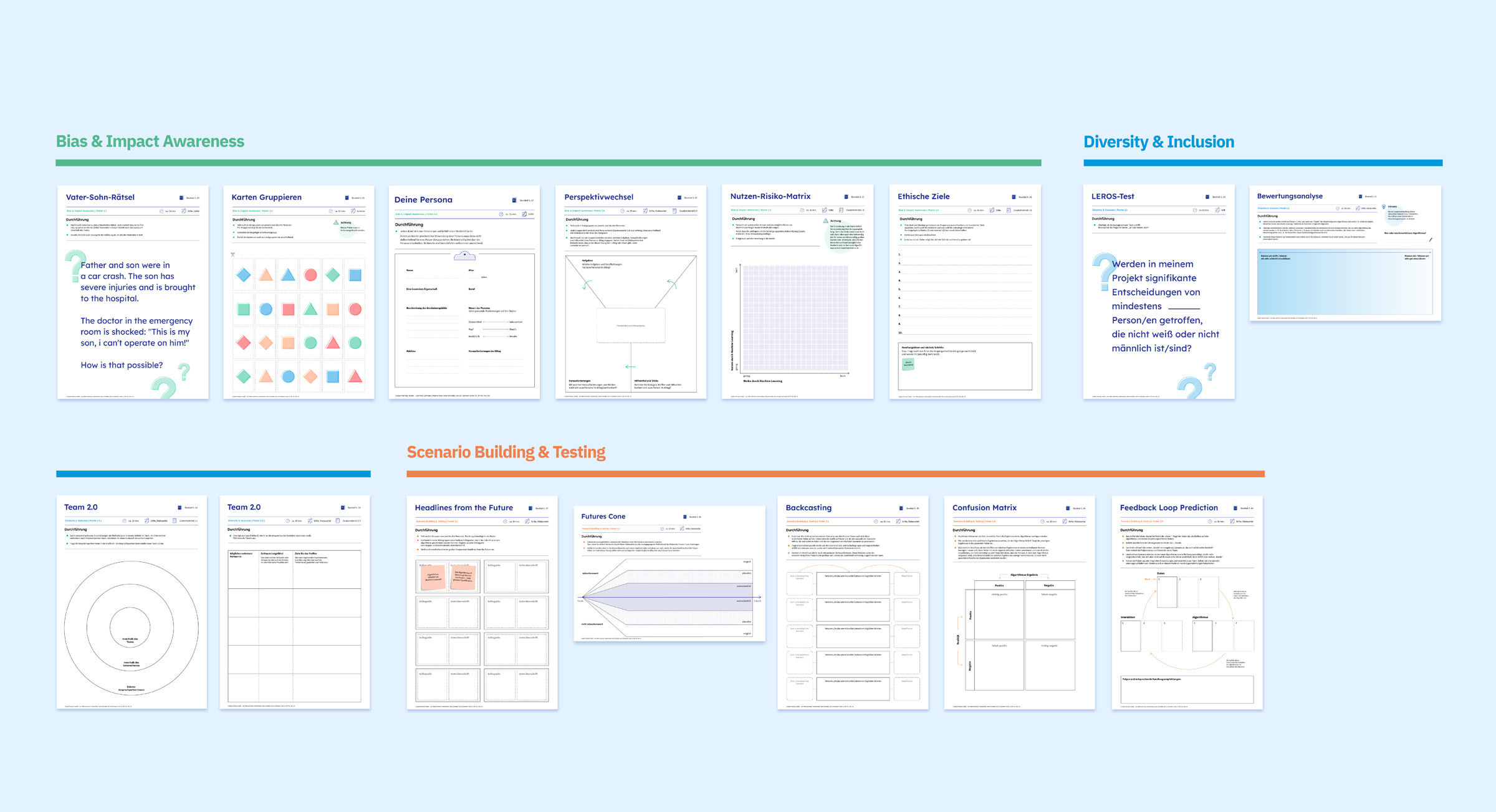

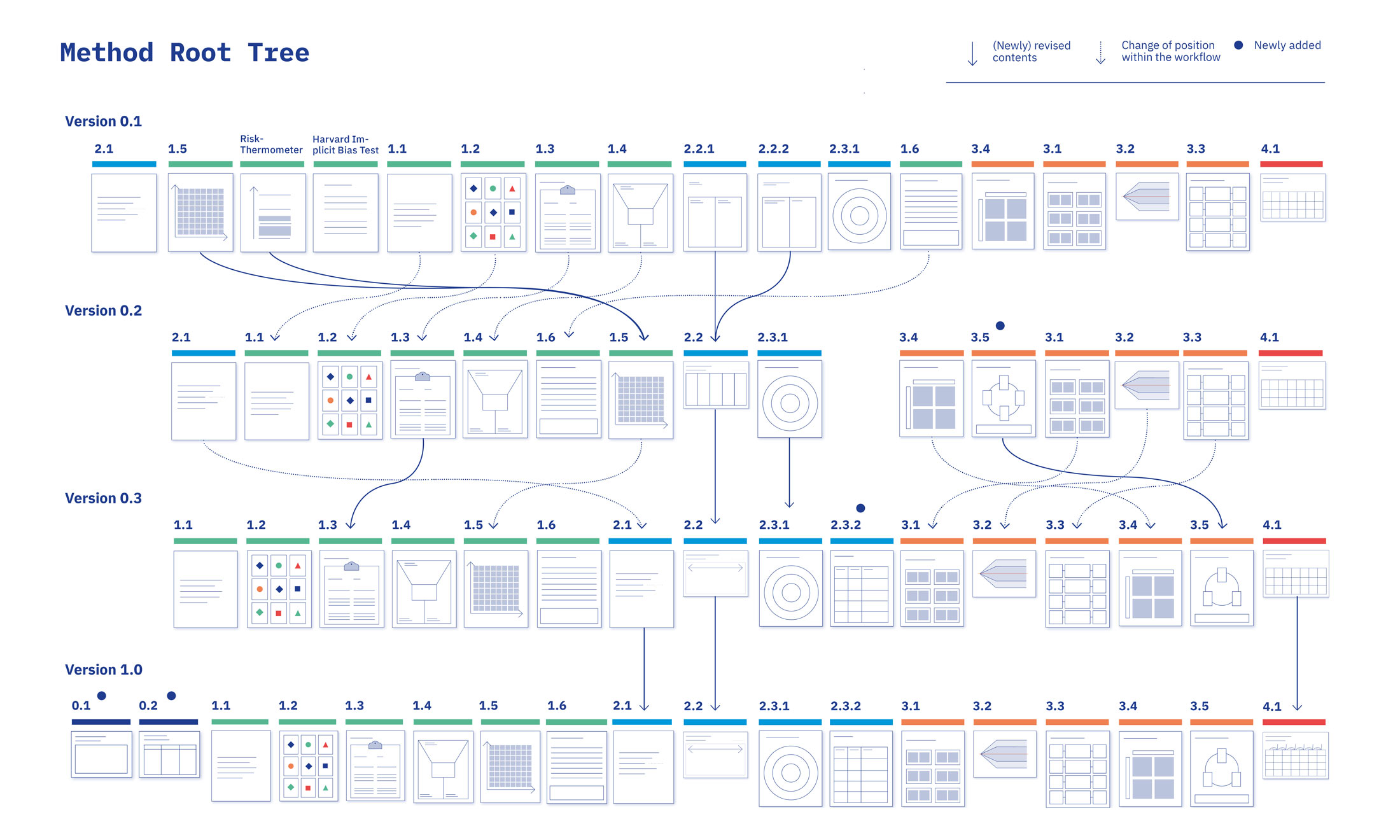

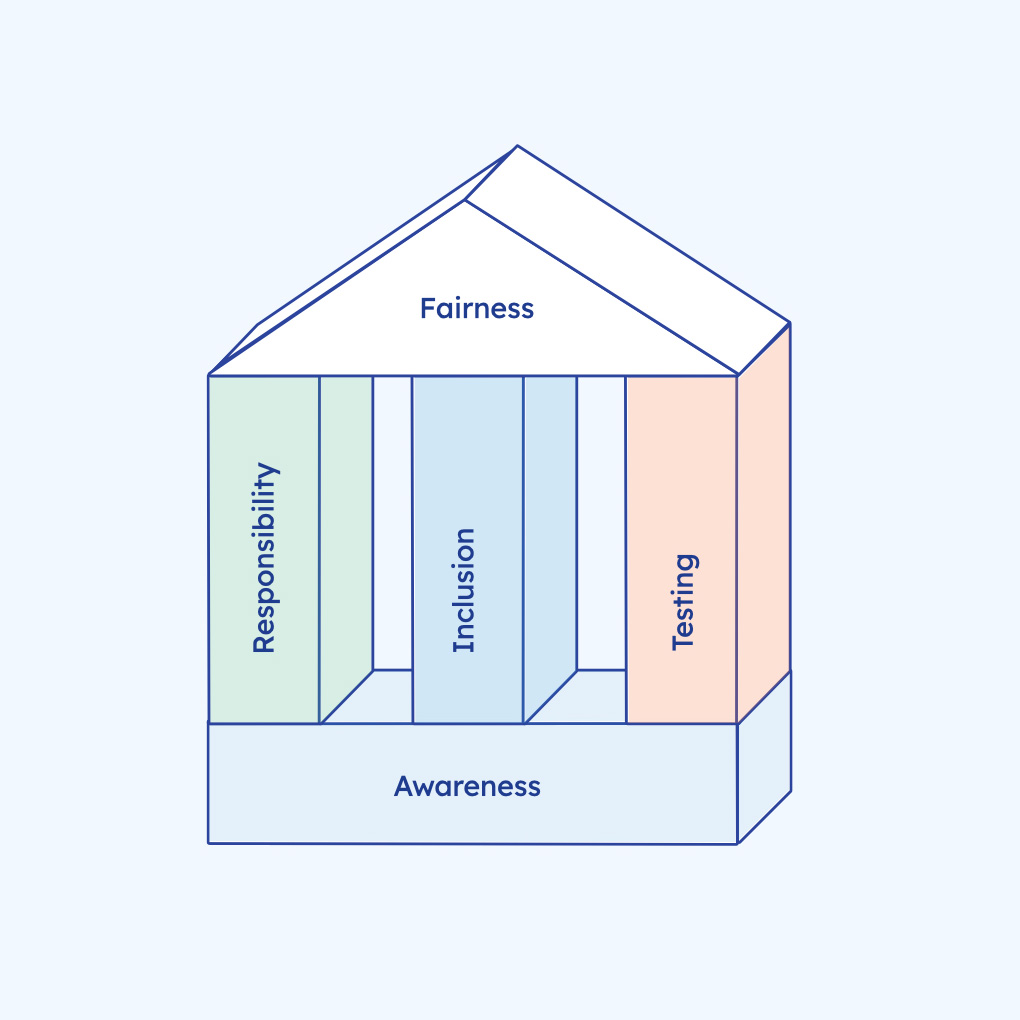

To guide the toolkit, we derived four basic principles from our research: awareness, responsibility, inclusion and testing. Based on these principles, we developed various methods and iterated on them through collaboration with experts from disciplines such as psychology and computer science, as well as through user testing.

The field of human-centered design encompasses many promising approaches and experiences that may also be applied to products developed in other disciplines. We want to bring these different perspectives together to add another piece to the puzzle surrounding biases in machine learning, because in our eyes this is an issue that needs to be addressed across the full spectrum of disciplines.

The Coded Fairness Toolkit

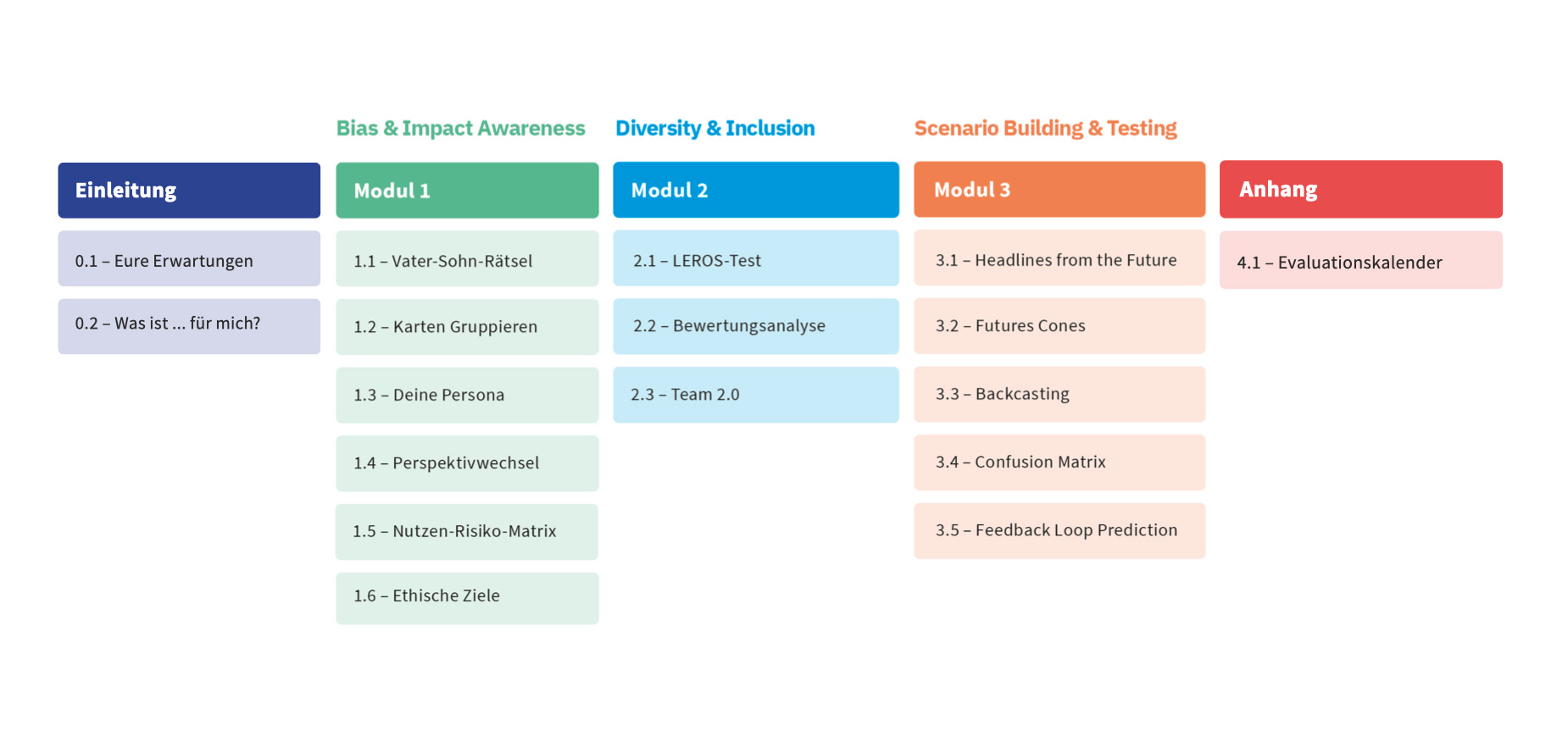

Based on these core principles, we developed the main component of our measures, the Coded Fairness Toolkit: a set of 14 methods designed to help developers prevent biases in their systems.

The methods draw on a variety of disciplines, including psychology, sociology, computer science, human-centered design and futuring. By combining a factual approach with a personal one, the methods aim to make bias prevention more productive.